How interviews could be misleading your admissions process

November 21, 2020

Academic admissions generally assess applicants in two categories: 1) academic performance and 2) personal and professional characteristics.

Academic performance for admission into professional education programs is measured through GPA and scores on standardized tests, such as the MCAT for medical school, the GMAT for business school and the GRE for other graduate-level programs. GPA and cognitive scores on these standardized tests have been shown to be valid assessment tools. The process for assessing personal and professional characteristics, however, is not as robust. These qualities are typically measured through reference letters, personal statements and, most widely, interviews.

Most professional education programs require applicants to participate in an interview as part of the admissions process. These interviews are intended to assess characteristics like work ethic, ambition, creativity, empathy and kindness, along with motivation and problem-solving skills.

Most schools consider the interview an important part of their admissions process, so they dedicate a considerable amount of faculty time to arranging and conducting these meetings. But the time invested doesn't necessarily make interviews effective. To be effective, interview results must be both highly reliable and valid. As it turns out, they rarely are.

What do the first studies tell us about interviews?

Research on interviews first focused on the medical admissions process. Back in 1981, medical schools hadn't established a way to evaluate the effectiveness of their own interviews. Instead, they relied on the academic literature to demonstrate the interviews' reliability and validity, but that literature didn't offer conclusive evidence. A study by Puryear and colleagues from that same year couldn't even identify which factors interviews actually measure.

Results from another study in 1991 added to the evidence that interviews have questionable reliability and validity. The authors advised that unless schools were willing to spend substantial time and resources training interviewers and building highly structured interview formats, they should consider dropping interviews as an admission tool altogether. And, they warned, even schools that made this investment could not be assured that their interviews would make a difference in the admissions process.

Unstructured interviews are particularly flawed

In one well-known case, the University of Texas Medical School was required by the State Legislature to expand its class from its usual 150 students to a total of 200, after it had already made its selections and after other schools had already claimed the top-ranked candidates. The school filled the additional spots with applicants it had initially rejected, only seven of whom had received an offer from another medical school. Researchers found no difference in performance in the program between the applicants who were initially accepted and those who were initially rejected. So why had these applicants been ranked so differently? Largely because of interviewers' perceptions of the candidates in unstructured interviews.

Unstructured interviews are also common in other professional education programs and in the workforce. For example, a sociologist who interviewed bankers, lawyers and consultants about their recruitment practices found that they typically looked for candidates who were just like themselves.

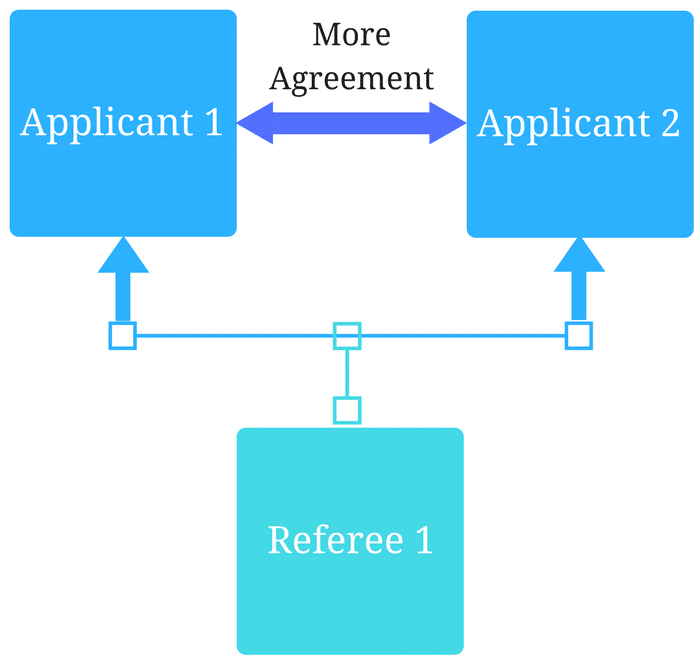

Although more structured interviews produce more reliable judgements, the reliability of interviews generally remains low. Interviewers tend to agree little with one another when evaluating the same candidates. Additionally, several studies have shown that applicants do not perform consistently across multiple interview sessions. Because a tool cannot be valid if it isn't reliable, unreliable interviews greatly compromise their own usefulness in the admissions process.

Recent research still shows interviews have low reliability and validity

Reliability is the degree to which a tool's measurements are reproducible; for interviews, this means that an applicant's scores should remain similar if the interview is repeated. If an interview is repeated, however, many extraneous factors can affect whether an applicant's scores match those from the first interview. These factors include having a different interviewer, being asked different questions, being interviewed earlier or later in the lineup of candidates, being interviewed right before or after a high- or low-performing candidate, and so on.

Kreiter and colleagues found that, despite the carefully developed structure of the interviews in their study, the interviews showed only low to moderate reliability and lacked the psychometric precision needed to serve as a highly influential tool in the academic admissions process.

Over time, well-trained interviewers and more structured formats have increased the reliability of interviews, but the gain has not been enough to make them valid. In fact, interviews have consistently failed tests of validity: they have failed to predict performance on objective structured clinical examinations (OSCEs) in medical school and on licensure exams. One notable exception is the structured Multiple Mini-Interview (MMI), which has been shown to predict performance on Part I and Part II of the Medical Council of Canada Qualifying Examination (MCCQE). Because the MMI requires a circuit of several short assessments with multiple interviewers, however, it's better suited to smaller applicant pools.

By comparing the medical school admissions process of 1986 with that of 2008, Monroe and colleagues found that interviews had become the most important factor in deciding whom to accept. Yet interviews are the costliest and most time-consuming part of the admissions process, and they offer low to moderate reliability and even lower validity. Because interview scores largely reflect the individual perspectives of interviewers and the context of each interview, using them as a predominant element of admissions calls into question the fairness of schools' decisions.

A better way forward

Acuity admissions assessments can supplement or potentially replace outdated admissions tools to enable defensible selections. Casper, our online open-response situational judgement test, is backed by over 15 years of research and provides admissions teams with a score that more accurately reflects an applicant’s personal and professional attributes. Admissions teams can see a more complete picture of their applicants and make more informed decisions, whether it’s determining final selections or prioritizing applicants for the limited structured interview spots.

Interested in understanding how this type of assessment may improve your process? Check out:

How situational judgement tests can benefit your admissions process

Originally written in 2017 by: Patrick Antonacci, M.A.Sc., Data Scientist

Updated in 2020 by: Andrea Coelho, Sr. Content Marketing Manager

Updated in 2022 by: Leanne Roberts, Content Writer
