Measurements for the success of assessment tools part I: reliability

May 26, 2019

Competitive academic programs use a variety of admissions tools to evaluate candidates and decide whom to accept. For these admissions assessments to be effective, the first step is demonstrating that they are reliable. Reliability is a fundamental psychometric measure of how reproducible assessment results are. The more error in a tool, the less likely it is to give the same score to the same person, and the less reliable it is.

A perfectly reliable test would give each candidate the same score every time. A perfectly unreliable tool would be a random number generator. A test needs to be more than just reliable, but a tool that isn’t sufficiently reliable has no chance of being useful. Reliability is necessary, but not sufficient, for a quality assessment tool.

In the real world, all assessment tools have measurement error. There are various types of errors. Using different reliability measures, we can quantify each and assess the overall reliability of an admission assessment tool. Here we look at a few different types of reliability measures and the errors they address:

Test-retest reliability

Test-retest reliability measures the error associated with different variants of the same assessment (typically differences in question sets and rating). It is measured by giving the same group of people a different version of the test on each of two occasions, within a short period of time and under similar conditions. The results from the two sittings are then compared to generate the test-retest reliability. This type of error can be compensated for by normalizing applicant scores within each test version, for example with z-scores or percentile scores.
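As a rough illustration of the comparison and normalization steps described above, the sketch below correlates scores from two sittings and converts each version's scores to z-scores. The Pearson correlation is one common choice for the comparison, not necessarily the statistic used in practice here, and the scores, variable names, and helper functions are all hypothetical.

```python
from statistics import mean, pstdev


def pearson(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def z_scores(scores):
    """Normalize scores within one test version (mean 0, std dev 1)."""
    m, s = mean(scores), pstdev(scores)
    return [(v - m) / s for v in scores]


# Hypothetical scores for the same five applicants on two test versions.
version_a = [62, 70, 75, 81, 90]
version_b = [55, 66, 68, 77, 84]

# A value near 1 means applicants are ranked consistently across versions.
reliability = pearson(version_a, version_b)

# Version B looks harder (lower raw scores); z-scores put both versions
# on a common scale so applicants are compared within their own version.
z_a = z_scores(version_a)
z_b = z_scores(version_b)
```

In this made-up data the raw scores on version B are systematically lower, yet the ordering of applicants is nearly identical, which is the situation where within-version normalization helps.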

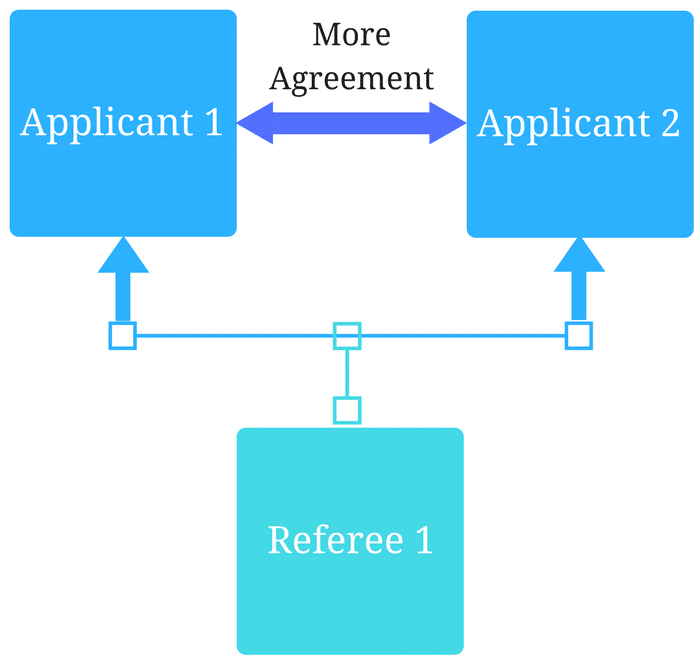

Inter-rater reliability (IRR)

IRR is the level of consensus in scores generated by different people evaluating the same test. It is measured by giving the same responses to different raters and measuring the differences in their scoring. If the scores vary widely from rater to rater, the reliability of all scores is called into question. Conversely, a high inter-rater reliability means that the raters largely agree. In more subjective assessments, a high, but not perfect, inter-rater reliability may be desirable if it allows for a variety of opinion.
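One way to put a number on rater agreement is Cohen's kappa, which compares the observed agreement between two raters with the agreement expected by chance. The article does not say which statistic is used, so treat this as a minimal sketch of one option; the ratings and the function name are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_1, rater_2):
    """Unweighted Cohen's kappa for two raters scoring the same responses."""
    n = len(rater_1)
    # Fraction of responses on which the two raters gave the same score.
    observed = sum(a == b for a, b in zip(rater_1, rater_2)) / n
    # Agreement expected by chance, from each rater's score frequencies.
    counts_1, counts_2 = Counter(rater_1), Counter(rater_2)
    expected = sum(counts_1[c] * counts_2[c] for c in counts_1) / n ** 2
    return (observed - expected) / (1 - expected)


# Hypothetical ratings (1 to 5) given by two raters to the same 8 responses.
rater_1 = [3, 4, 2, 5, 3, 4, 1, 2]
rater_2 = [3, 4, 3, 5, 3, 4, 1, 1]

kappa = cohens_kappa(rater_1, rater_2)
```

Unweighted kappa treats every disagreement as equally serious. For ordinal rating scales, a weighted kappa or an intraclass correlation is often preferred because it gives partial credit when raters are only one point apart.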

Internal consistency

Internal consistency examines whether similar elements (subsections) of an assessment produce similar outcomes. For example, to what degree do two test questions, both measuring reading comprehension, produce similar results for the same applicant? Internal consistency (often measured by Cronbach’s alpha, α) measures the error associated with using multiple questions to evaluate the same concept. In the field of psychometrics, the generally accepted threshold for a high-stakes admissions tool to be useful is an internal consistency above α = 0.7. However, if the internal consistency is too high (α > 0.95), it may be a sign of subsection redundancy.
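For reference, Cronbach's alpha for k questions is k / (k - 1) multiplied by (1 - sum of the per-question score variances / variance of applicants' total scores). The sketch below computes it with the Python standard library; the question scores and the function name are hypothetical, chosen only to show the calculation.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha.

    items: one list of scores per question, each ordered by applicant.
    """
    k = len(items)
    # Total score per applicant across all questions.
    totals = [sum(scores) for scores in zip(*items)]
    # Sum of the sample variances of the individual questions.
    item_variance = sum(variance(question) for question in items)
    return k / (k - 1) * (1 - item_variance / variance(totals))


# Hypothetical scores of five applicants on three related questions.
q1 = [2, 3, 4, 4, 5]
q2 = [1, 3, 3, 5, 5]
q3 = [2, 2, 4, 5, 4]

alpha = cronbach_alpha([q1, q2, q3])
```

With this made-up data alpha comes out at roughly 0.92, which falls between the 0.7 and 0.95 thresholds mentioned above.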

For a high-stakes admissions tool to be effective, all of the above types of reliability are important, and they are interrelated. If an assessment has low internal consistency, it is likely measuring unrelated characteristics of the applicant, which will usually result in low inter-rater and test-retest reliability as well. Thus, good internal consistency is necessary for a tool to be effective at measuring related qualities of an applicant.

Unfortunately, many assessments, even those historically favored for assessing non-cognitive characteristics (see: Reference Letter Blog, Personal Statement Blog), tend to fare poorly in reliability, and their results should be viewed skeptically.

While reliability is necessary, it is not sufficient. An admissions screening tool also needs to have “predictive validity”, meaning that it predicts some useful future outcome.

Reliability is frequently used as a first screen because the data can be collected quickly and relatively easily, whereas validity requires time for outcomes to develop. Because predictive validity is never possible unless reliability is first present, reliability is always the first step in assessing new or existing academic screening tools.

By: Patrick Antonacci, M.A.Sc., Data Scientist
