7 Assessment Form Design Pitfalls in Medical Education and How to Avoid Them

July 17, 2025

Even well-designed assessment strategies in medical education can stumble on subtle but significant flaws in the forms themselves. Whether you’re in undergraduate medical education, a clinical teaching program, or another health professions school, poorly structured forms can undermine data quality, frustrate evaluators, and slow down decision-making. Below are seven of the most common pitfalls we’ve seen, with tips on how to steer clear of them.

1. Inconsistent Rating Scales

The Problem:

Mixing Likert scales (e.g., 1–5 vs. 1–7) or using different rubric criteria within or across forms makes it challenging to compare or aggregate data meaningfully.

The Fix:

- Standardize scale definitions across all forms, ideally using a central template.

- Provide faculty training on interpretation to reduce variability.

- Align thresholds within each scale type, not across all forms. For example, a “3” may indicate competence on a developmental scale, while entrustment decisions often require a “4.” Focus on consistent definitions and faculty training to ensure fair, interpretable results.
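One way to picture a central template with per-scale thresholds is a small registry of scale definitions that every form draws from. The sketch below is illustrative only: the `RatingScale` class, the scale names, and the anchor wording are hypothetical, and the thresholds simply mirror the “3” vs. “4” example above rather than any prescribed standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RatingScale:
    """A hypothetical scale definition shared by every form that uses it."""
    name: str
    anchors: dict[int, str]  # scale point -> descriptor shown to evaluators
    threshold: int           # lowest rating that meets expectations on THIS scale

    def meets_threshold(self, rating: int) -> bool:
        # Reject values that are not defined points on this scale.
        if rating not in self.anchors:
            raise ValueError(f"{rating} is not a point on the {self.name} scale")
        return rating >= self.threshold


# Placeholder definitions; anchor text and thresholds are assumptions.
SCALES = {
    "developmental": RatingScale(
        name="developmental",
        anchors={1: "Novice", 2: "Advanced beginner", 3: "Competent",
                 4: "Proficient", 5: "Expert"},
        threshold=3,
    ),
    "entrustment": RatingScale(
        name="entrustment",
        anchors={1: "Observe only", 2: "Direct supervision",
                 3: "Indirect supervision", 4: "Unsupervised",
                 5: "May supervise others"},
        threshold=4,
    ),
}
```

Because each form references a scale by name instead of redefining it, a “3” is always interpreted against the threshold of its own scale type.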

2. Forms That Are Too Long

The Problem:

Lengthy forms cause fatigue, leading to rushed or incomplete responses, especially on mobile devices. They also increase the administrative burden of managing incomplete forms.

The Fix:

- Reduce items to a set of high-yield domains that align with program outcomes.

- Decide what needs to be sampled formatively vs. summatively.

- Aim for brevity, especially in workplace-based assessments.

- Use sampling strategies for didactic courses to reduce respondent fatigue.

3. Blurring Event vs. Faculty Assessments

The Problem:

Combining feedback on courses or clinical rotations with feedback on individual instructors muddies your data and weakens reporting for both program improvement and faculty development.

The Fix:

- Separate these assessments entirely — in both form structure and reporting metadata.

- Use distinct form templates and clear naming conventions like “Rotation Feedback – 2025” vs. “Faculty Assessment – Clerkship Seminars 2025”.

4. Unclear Instructions and Labels

The Problem:

Vague or missing instructions lead to inconsistent interpretations. Respondents may misunderstand how to use the rating scale or what a question is really asking.

The Fix:

- Use embedded instructions and contextual help text throughout your forms.

- Anchor every scale point with a concise behavioural descriptor (e.g., “Explains plan in plain language; checks for understanding”).

5. Mandatory-Field Overkill

The Problem:

Overloading your forms with “required” fields frustrates respondents and leads to lower compliance. Worse, forcing answers without a “Not Applicable” (N/A) or “Opt-Out” option can generate misleading data.

The Fix:

- Make only core questions mandatory — the ones tied to your critical learning outcomes or compliance reporting.

- Add explicit N/A or opt-out options so evaluators can skip irrelevant questions without compromising data quality.
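To see why an explicit N/A option protects data quality, consider how ratings might be averaged downstream. The following is a minimal sketch that assumes N/A answers are stored as `None`; the `mean_rating` helper and the sample data are hypothetical.

```python
from statistics import mean
from typing import Optional

NOT_APPLICABLE = None  # assumed sentinel for an explicit N/A answer


def mean_rating(ratings: list[Optional[int]]) -> Optional[float]:
    """Average the numeric ratings, leaving explicit N/A answers out."""
    answered = [r for r in ratings if r is not NOT_APPLICABLE]
    # With no numeric answers there is nothing to average.
    return float(mean(answered)) if answered else None


# Two evaluators could not observe the skill and chose N/A.
responses = [4, NOT_APPLICABLE, 5, NOT_APPLICABLE, 3]
average = mean_rating(responses)
```

Had the field been mandatory with no N/A option, those two evaluators would have had to enter a number they could not justify, and the average would reflect guesses rather than observations.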

6. Missing Version Control

The Problem:

When forms evolve without clear versioning, it becomes hard to track which data came from which version, making analysis and longitudinal comparisons difficult.

The Fix:

- Include version numbers or academic years in your form titles (e.g., “Clinical Eval Template v2025.1”).

- Document any changes in a shared version control log. This supports clean data segmentation and defensible accreditation reporting.
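If titles follow a convention like the “v2025.1” example above, responses can be segmented by version before analysis. This is a rough sketch under that assumption; the `form_title` field name and both helper functions are hypothetical and would need adapting to however your system exports data.

```python
import re
from collections import defaultdict

# Matches a trailing version tag such as "v2025.1" (year, then revision).
VERSION_PATTERN = re.compile(r"\bv(\d{4})\.(\d+)$")


def parse_version(title: str):
    """Return (year, revision) from a form title, or None if no tag is found."""
    match = VERSION_PATTERN.search(title.strip())
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def group_by_version(records: list[dict]) -> dict:
    """Bucket response records by the version parsed from their form title."""
    groups = defaultdict(list)
    for record in records:
        groups[parse_version(record["form_title"])].append(record)
    return dict(groups)
```

Records whose titles carry no version tag land under the `None` key, which makes unversioned data easy to spot before it is mixed into a longitudinal comparison.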

7. No Feedback Loop for Form Revisions

The Problem:

If you’re not regularly reviewing and revising your forms based on actual user experience, small issues compound over time, resulting in data drift, low engagement, or even faculty burnout.

The Fix:

- Institute a formal review cycle (annually or each term) and gather input from both faculty and learners.

- Use analytics on form completion rates and opt-out frequencies to guide revisions.

- A good rule: if a question is frequently skipped, it may not be useful.
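The skip-rate rule above can be checked with a few lines of analysis. The sketch below assumes each submission is a dictionary keyed by question ID, with a skipped answer stored as `None` or the string `"N/A"`; the function names and the 50% cutoff are illustrative assumptions, not recommended values.

```python
from collections import Counter


def skip_rates(submissions: list[dict], question_ids: list[str]) -> dict[str, float]:
    """Fraction of submissions in which each question was blank or marked N/A."""
    if not submissions:
        return {qid: 0.0 for qid in question_ids}
    skipped = Counter()
    for submission in submissions:
        for qid in question_ids:
            # A missing key is treated the same as an explicit skip.
            if submission.get(qid) in (None, "N/A"):
                skipped[qid] += 1
    return {qid: skipped[qid] / len(submissions) for qid in question_ids}


def flag_for_review(rates: dict[str, float], cutoff: float = 0.5) -> list[str]:
    """List the questions whose skip rate meets or exceeds the cutoff."""
    return sorted(qid for qid, rate in rates.items() if rate >= cutoff)
```

A flagged question is a prompt for the review cycle, not an automatic deletion: a high skip rate may mean the item is irrelevant, or only that its wording or placement needs work.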

Better Assessment Form Design = Better Medical Education Data

Great assessment data starts with great form design. By avoiding these hidden pitfalls and applying best practices drawn from the field, you can improve data quality, enhance evaluator engagement, and support better decisions across your health professions program.

→ Download the Assessment Form Design Checklist for MedEd to assess your current practices.

→ Want to see how One45 by Acuity Insights helps you build better forms out of the box? Book a demo today.

Related Articles

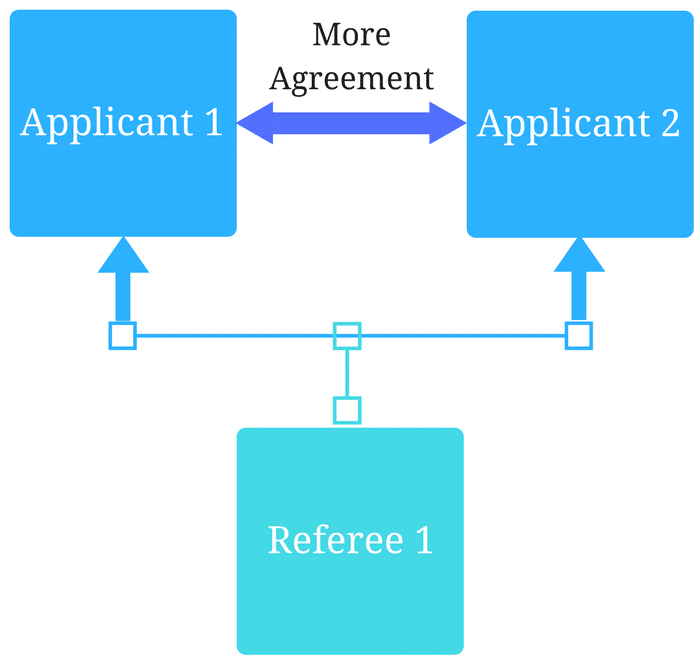

How interviews could be misleading your admissions...

Most schools consider the interview an important portion of their admissions process, hence a considerable…

Reference letters in academic admissions: useful o...

Because of the lack of innovation, there are often few opportunities to examine current legacy…