How healthy is your school’s data?

February 11, 2021

If you have ever been responsible for compiling a report on your school’s performance, you know how infuriating and time consuming the process can be if the data isn’t in great shape. Hunting down that one “hidden” spreadsheet your colleague has on their personal drive, fixing transcription errors, and deduplicating data from multiple sources are just a few of the harrowing things that need to be done to get an accurate reporting of your school’s performance.

Whether or not you’re planning on using a data warehouse to consolidate this data and help with reporting, taking stock of your school’s data “health” is critical to ensure you can continue making sense of the data you collect. Unfortunately, data quality is subject to entropy – becoming disorganized and unusable over time. Without a concerted effort to manage your data quality, over time it will become more difficult to use and less trustworthy for decision-making.

So – how healthy is your school’s data? What does good data even look like? Understanding this and the process to nurse your data back to health is critical for anyone struggling to report on data, make data-based decisions, or prepare data for use in a data warehouse.

Characteristics of quality data

The key characteristics of high-quality data are always consistency, accuracy, completeness, and conformity.

Consistent

Above all else, data should be consistent and look identical within its system of record and across all systems that hold the data. This is something that is best to automate with technology through data synchronization processes that remove transcription errors. Without consistency, it becomes very challenging to aggregate and link common records together across your systems. We see consistency problems most commonly with:

- Naming conventions across systems. For example, oftentimes a course will be referred to one way in one system and another slightly different way in a second system. These are usually easy to spot issues such as the word “and” replaced with an ampersand “&” in a course title. But these issues lead to problems automatically aggregating data associated with the records as you now need to account for every possible naming of the course when pulling this data.

- Unique identifiers. In order to accurately link records from one system to another, one or more attributes that are unique to that record must be present in all systems. Oftentimes, we see different unique identifiers used in various systems. For example, this occurs frequently with student records that use one type of student identifier in one system and another separate type of student identifier in another system. While students may very well have different student identifiers that you need to keep record of, only one must be used across systems that store student records to facilitate easy and accurate linking of these students.

- Terms and vocabulary. To reduce misinterpretation of data, standardized lists of common terms and vocabulary should be used in your data. This is most helpful for people analyzing data across multiple data silos or departments where each may have different terms to refer to something. For example, one course may refer to a final exam as a “Final Exam” while another course may refer to it as the “Year-End Exam”. This can lead to confusion and questions by others trying to interpret this data, such as “Are these the same kind of exam? What’s the difference between a year-end exam and final exam?” While this may seem pedantic, it cuts down on potential misinterpretations of the data. If a data analyst knows there’s a standardized list of exam terms, they can rely on it to interpret these terms correctly.

Accurate

While you may find your data that gets recorded in the “source of truth” system to be highly accurate, it’s common to see inaccuracies in other systems where the data might have originated from, or been duplicated to. Errors in data happen for many reasons:

- Transcription. When data has to be manually entered from one system (or spreadsheet) into another, it opens the door to inevitable data omissions, duplications, typos, or straight-up substitutions of one record for another. The fewer times a piece of data is manually transcribed, the less data accuracy will be a problem. For example, an administrator having to copy grades from an LMS (e.g., Canvas) into the registrar’s system (e.g., Banner) might miss a grade, or accidentally modify it before copying them over.

- Synchronization. Data updated independently in separate systems is a disaster waiting to happen. If data can be edited from more than one system where it is stored, modifications should always flow in one direction from system to system. Rules and processes should be in place to ensure the data is only edited in the originating system. Keeping this data synchronized to all downstream systems in a timely manner will reduce or eliminate discrepancies between systems. For example, if exams are administered and automatically scored using proctoring software, but their scores are adjusted in a separate system, then discrepancies are introduced between the source system and the downstream system which can lead to misinterpretation and questions of confidence in the data.

Complete

Quality data shows a complete picture of what it’s recording. Oftentimes, however, data may be recorded in systems without key attributes that would enable it to be used properly. Completeness is important for ensuring your data records have everything they need to be used. While some attributes of a record may be optional, errors can occur if they are treated as optional when in fact they should be considered as necessary and required. Issues with data completeness are found with:

- Unique identifiers. Sometimes, the aspects that could uniquely identify a record across systems are missing. For example, what uniquely identifies one course record from another? Is it just the name, or are there other factors such as subject code, course number, section code, and academic year that together form a unique identifier for that course? Without these unique identifiers, problems arise with preventing duplicates, as well as linking records (e.g., courses) from one system to another.

- Record changes. Some, but not all, records should keep track of how they’ve changed over time. For example, if a student retakes an exam or course to remediate a failing grade, should their original attempt(s) be kept somewhere in a system of record? Who needs access to these original scores, and who just needs to see the final attempt? Answering these questions will help clarify how records are being modified and adjusted in systems of record.

- Data silos. While there’s a host of problems with synchronizing data across systems, an equally daunting problem occurs when data is kept locked away in different departments (i.e., data silos). This hampers the ability of information to flow freely to those that need it and leads to disconnects where data in one silo may require or benefit from data in another silo.

Conformant

Ensuring data conforms to certain formats and ranges makes it easier to calculate aggregate measures (e.g., averages) without unexpected and inaccurate results.

- Data is in expected format. Adding text to a data attribute that expects to be a number will lead to issues when trying to run aggregate calculations on that attribute. Good quality data is backed with clear documentation around format requirements for each data attribute. For example, representing a letter grade in a data attribute meant for scores, or vice-versa, can lead to these types of issues.

- Data is within expected range. Similarly, data should be within expected ranges for a data attribute. Using the “score” example again – a score that is negative, or a percent that is well above 100 can cause problems when aggregating it with other scores. Similar to what was mentioned previously, good quality data is backed with clear documentation around expected range requirements.

- Data attribute is only used to represent one thing. An attribute about a piece of data (e.g., the first name that makes up part of a student record) should only ever represent one concept. For the sake of convenience, oftentimes an attribute is used to represent multiple different things (called “overloading”) when there is no alternative attribute available to keep track of this detail. This creates potential confusion for those analyzing the data, and could lead to wrong interpretation or wrong inclusion in reporting. For example, using a student’s middle name attribute to indicate whether or not they are on a leave of absence could lead some to question whether the value stored in the attribute is the actual middle name or leave of absence indicator.

Data quality is critical to making the best use of your data – whether it be monitoring key performance indicators, answering important questions, or making data-informed decisions. one of these is possible without first having a solid, trustworthy data source. Now that you may have identified opportunities for growth, how do you formulate a treatment plan for your data and its systems? Review our recommended treatment plan to make (and keep) your MedEd data healthy.

Related Articles

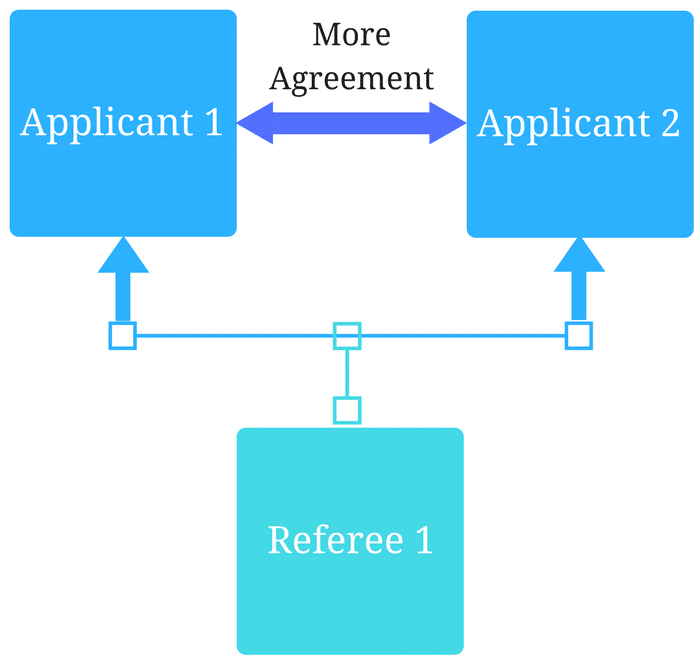

How interviews could be misleading your admissions...

Most schools consider the interview an important portion of their admissions process, hence a considerable…

Reference letters in academic admissions: useful o...

Because of the lack of innovation, there are often few opportunities to examine current legacy…