CBME: Designing for the outcomes you want

March 24, 2021

The most important part of embarking on a new data-centered project is having a clear understanding of what you want to know, not just at the end when your entrustment or competence committee is meeting, but also at signposts along the way.

Now, this might change over time as you collect data, but without being clear on your desired end goal at the outset, you’ll struggle to design your assessment tools and processes as well as disseminate and make sense of the data you’ve collected.

This isn’t unique to competency-based medical education (CBME), but it becomes more critical, especially if you’re implementing workplace-based assessments (WBAs) with direct or supervised observations. I’ll focus on observable EPAs and WBAs here, as they come with some unique challenges because they’re learner-driven and more frequent.

We’ve previously talked about understanding the impact of assessing EPAs, thinking about considerations such as shifting the culture of assessment, and the concept of entrustability and entrustment-supervision (ES) scales.

Considerations when adding EPA assessments to your curriculum

When adding EPAs into the mix, ask yourselves the following questions to ensure you get the outcomes you want while maintaining data integrity:

- When are learners expected to be observed and assessed?

Not all EPAs can be observed effectively during encounters on clinical rotations. If your EPAs are being observed during simulation sessions, OSCEs, or other events, make sure your assessment form captures the appropriate setting so you can easily discern assessments in controlled circumstances versus assessments in a work environment.

- How many observations are learners required to obtain?

Most programs ask learners to be assessed a certain number of times per week, rotation, or year, which needs to be communicated to both learners and faculty alike. Are there any additional requirements on setting, patient characteristics, or observer role?

Ensure that it is feasible for learners to ask for and be assessed on the number of EPAs you have assigned. Consider how many learners are rotating through a given clinical rotation and how many faculty are available to assess them.

- Who needs to see the data? Who is monitoring if learners are getting the assessments they need?

Setting up your EPAs with this end goal in mind is critical, so information funnels upwards to the final review process. While learners are in the driver’s seat with requesting EPAs, programs often wish to review their progress so the final summative decision has enough data to be truly informed.

There’s also a built-in assumption that learners should see their own assessments and take on ownership of their own learning outcomes.

- Is there a minimum score on the ES scale required for your entrustment or competence committee to make a summative decision?

Whether you’ve landed on a 2-point scale or an 8-point scale – and we’ve seen both – the requirements need to be clear: is it sufficient to be observed taking a history, or does that task need to meet a minimum threshold to be trusted or achieved?

- What data does your entrustment or competence committee need to make a decision?

You’ve made the decisions about what data you’re collecting, so consider how your program wants to review that data. Are there narrative questions whose feedback you want to pull out easily? If you’re evaluating competencies and/or sub-tasks, how do they play into your decision-making process?

If you’re assessing competencies linked to EPAs, there may be no overall or minimum score, which makes the competency ratings themselves more critical.
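
To make these questions concrete, here is a minimal Python sketch of the kind of roll-up a program might want before a committee meeting: observations per learner and EPA, split by setting, with a count of how many reached a chosen ES score. Everything in it is an assumption for illustration: the `Observation` fields, the setting labels, the `summarize` function, and the threshold values are hypothetical and not taken from any particular assessment platform.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    """One EPA assessment record (hypothetical fields)."""
    learner: str
    epa: str
    setting: str   # e.g. "workplace", "simulation", "osce" (assumed labels)
    es_score: int  # rating on the program's ES scale


def summarize(observations, min_observations, min_es_score):
    """Roll up observations per (learner, EPA).

    Reports the total count, the count per setting, how many
    observations reached min_es_score, and whether the required
    number of observations has been collected. The summative
    decision itself is left to the committee.
    """
    by_setting = defaultdict(lambda: defaultdict(int))
    at_threshold = defaultdict(int)

    for obs in observations:
        key = (obs.learner, obs.epa)
        by_setting[key][obs.setting] += 1
        if obs.es_score >= min_es_score:
            at_threshold[key] += 1

    report = {}
    for key, settings in by_setting.items():
        total = sum(settings.values())
        report[key] = {
            "total": total,
            "by_setting": dict(settings),
            "at_or_above_threshold": at_threshold[key],
            "enough_observations": total >= min_observations,
        }
    return report


if __name__ == "__main__":
    # Made-up sample records, purely for illustration.
    sample = [
        Observation("learner_a", "history_taking", "workplace", 3),
        Observation("learner_a", "history_taking", "simulation", 2),
        Observation("learner_b", "history_taking", "workplace", 4),
    ]
    for key, row in summarize(sample, min_observations=2, min_es_score=3).items():
        print(key, row)
```

Recording the setting on every observation is what allows the split between controlled and workplace assessments, and keeping the threshold as a parameter lets the same roll-up serve a 2-point or an 8-point scale.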

Supporting your CBME assessment framework from the beginning

These are important considerations when creating the architecture that will support your CBME assessment framework. The answers will determine how and where to create and collect your assessment data, how to structure permissions, and most critically, how to set up both your assessment tools and mapping lists to get the data you want and in the format you need when it’s time to make a summative decision.

Although the end goals of applying EPAs to your program or curriculum may evolve over time, approaching the first steps with a defined goal in mind can help you shape the structure and data requirements you need. Thinking these details through in advance will set you, your program, and your learners up for success and empower you to more easily make adjustments to evolve how you’re assessing and measuring competency in your program over time.

Related Articles

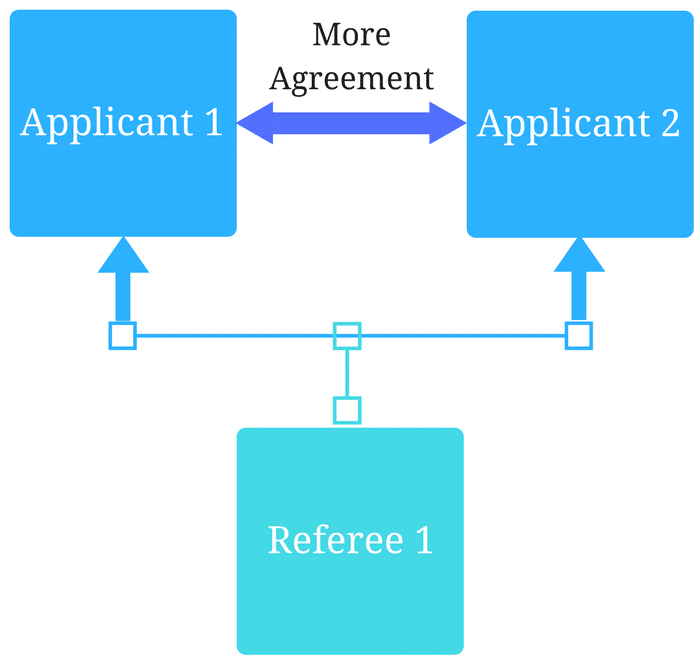

How interviews could be misleading your admissions...

Most schools consider the interview an important portion of their admissions process, hence a considerable…

Reference letters in academic admissions: useful o...

Because of the lack of innovation, there are often few opportunities to examine current legacy…