11 graduate medical education programs pilot a suite of professionalism assessments

February 12, 2021

“We used Duet to find additional candidates to interview. These candidates had lower step scores so we would have missed them otherwise, but with Duet we were able to interview 18 candidates that were fabulous!”

Dr. Donald Hess, Program Director of the General Surgery Residency, Boston University School of Medicine

In many ways, applying to residency training is a complex process with competing needs. Applicants are still completing their education, but entering residency also marks the start of their careers in medicine. This unique feature of graduate medical education (GME) demands a new approach to selection.

Clearly, it’s not just about the grades. A hiring manager weighs a candidate’s technical skills alongside their overall professionalism, such as their ability to lead, collaborate with teams, solve problems, and think critically. GME programs must balance these same skills and competencies. After all, they’re looking for professionals, not just good students.

The cost of behavioral issues in medicine

Research has consistently shown how important professionalism is in healthcare. For example, 95 percent of disciplinary actions reported to medical boards in the US were attributed to professionalism issues. Another study of surgical residency programs found that up to 30 percent of all residents required at least one remediation intervention, costing programs up to $5,300 per episode. The same study also found that attrition rates in some specialties ranged from 20 percent to 40 percent.

Gaps in current approaches to holistic applicant review

Like many other higher education programs, GME selection teams lack reliable, standardized tools to support holistic applicant review. Personal statements and reference letters are cumbersome to review and do not predict future professional success. Instead, these programs may rely heavily on United States Medical Licensing Examination (USMLE) Step 1 and Step 2 scores to filter and screen large numbers of applicants. Those scores were never intended to be used this way. In addition, USMLE Step 1 is moving to pass/fail score reporting, and Step 2 CS has been discontinued altogether. For osteopathic medical students, COMLEX-USA Level 1 is moving to pass/fail in 2022, and COMLEX-USA Level 2-PE is being suspended.

This poses a real problem for programs, especially because they are inundated with applications and have very limited positions to offer. No single replacement for USMLE and COMLEX scores will suffice. To find the right match, programs need more than one tool. These additional tools can also support applicants by helping them authentically portray all aspects of themselves to programs.

Piloting a new suite of professionalism assessments

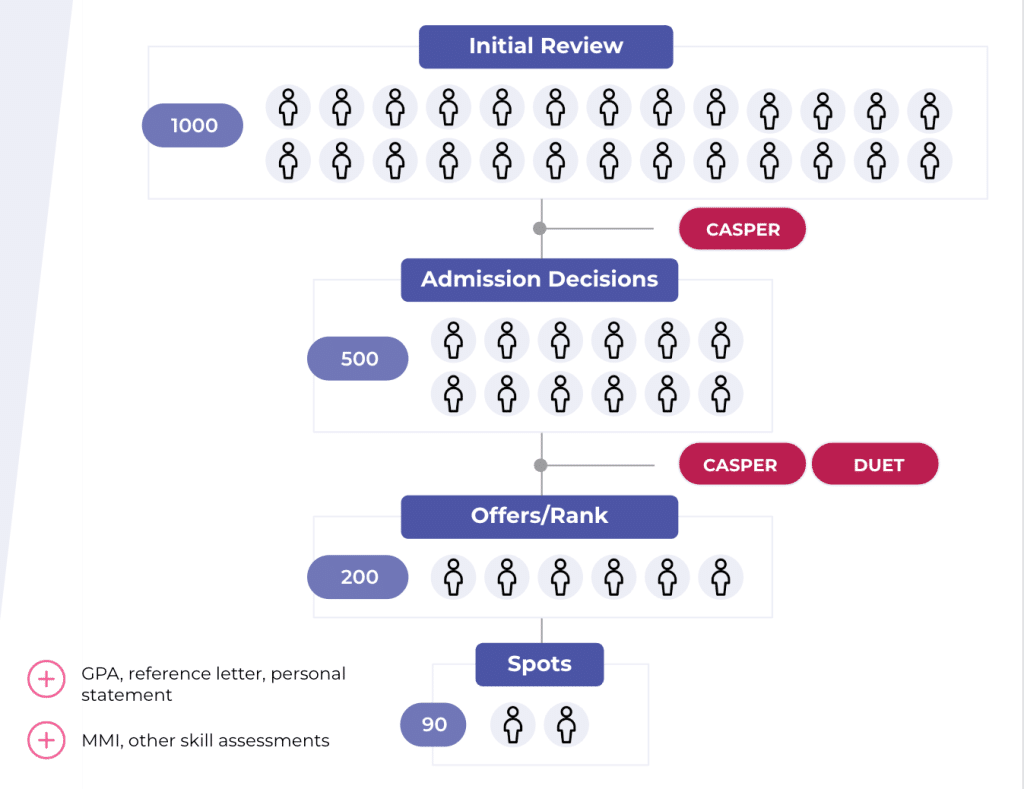

Recognizing these gaps, Acuity Insights designed a complete set of professionalism assessments that GME programs could use at multiple points in their selection process. Acuity piloted the assessments and partnered with the NBME on research to evaluate their utility. The three assessments include:

- Casper, an online, open-response situational judgment test that measures applicants’ professionalism and social intelligence.

- Duet, a value-alignment assessment that helps programs find applicants who are the best match for them.

Built on years of research, these standardized assessments provide metrics that programs can readily use to inform decisions at each stage of the selection process. Casper on its own has demonstrated predictive validity for performance on the Canadian licensure exams, which can serve as a surrogate for non-cognitive performance in the profession. Duet was designed according to best practices, including input from stakeholders across the selection process.

Once the assessments were developed, Acuity piloted them in the 2020/2021 academic cycle with 11 residency programs to study the feasibility, acceptability, and psychometrics of the entire suite. Programs asked all of their applicants to complete the assessments when the Electronic Residency Application Service (ERAS) opened for the 2020/2021 cycle.

Program participants

| OB-GYN | Internal Medicine | General Surgery | Family Medicine | Psychiatry |
|---|---|---|---|---|
| NYU Langone Health | Parkview Medical Center | Boston University Medical Center | Hamilton Medical Center | Temple University |
| University of Texas Rio Grande Valley | Hamilton Medical Center | UConn Health | | |
| University of Michigan | UConn Health | Florida Atlantic University | | |

The results and feedback from programs and applicants

Over 5,000 applicants completed the suite of assessments as part of their applications to participating programs, and their exit surveys generally reported a positive experience. On technical feasibility, only six percent of applicants experienced a technical issue, and all but one of those issues were resolved immediately.

Applicant acceptability for Duet, the newest assessment, was comparable to that of Casper. Applicants generally saw Duet as a valuable way for programs and applicants to find alignment. One applicant noted in their exit survey that it was “a good way to gauge what you find important and compare that to what the program thinks is important.”

Applicants also noted in their exit surveys that the different assessments provided multiple opportunities to showcase unique personal and professional qualities beyond their standard application. One said, “I think it is a well-rounded form of testing the candidate on a personal level,” while another shared that “if this brings programs to focus more holistically on applicants beyond just grades, then it is worth it.”

Feedback from participating programs was also positive. For Duet specifically, programs valued having a tool that could help them assess applicants for “fit.” Fit matters because GME programs differ greatly from one another in size, location, availability of research opportunities, and patient population.

Dr. Donald Hess, Program Director of the General Surgery Residency at Boston University School of Medicine, shared that the team used Duet to find additional candidates to interview: “These candidates had lower step scores so we would have missed them otherwise, but with Duet we were able to interview 18 candidates that were fabulous!”

The surgery residency program at Florida Atlantic University found value in reviewing Casper scores. Dr. Thomas Genuit, the program director, said, “It was interesting to see that there were quite a few candidates who looked good on paper, but when looking at their Casper scores some potential red flags were revealed. When we’re trying to find well-rounded applicants, those personal and professional attributes really matter.”

The OB-GYN residency program at the University of Texas Rio Grande Valley used all three assessments in its algorithm for deciding whom to interview and how to rank applicants on its waitlist. Although all three assessments were valuable, Casper’s strong psychometrics led the team to weight Casper scores more heavily than Duet scores when selecting applicants to interview.

Dr. Salcedo said, “With our very limited interview spots, we did end up inviting several applicants to interview in large part due to the Casper scores. They really blew it out of the water with their scores, placing in the 90th percentile or higher – and these were individuals who otherwise would have been on the waitlist.”

In future rank meetings, Dr. Salcedo expects that Casper and Duet scores, along with board scores, will be frequent points of discussion and will inform decisions about whom to move up or down the list.

All in all, participating programs found that each assessment revealed unique qualities of applicants that they would not otherwise have known, and helped them consider more than applicants’ cognitive competencies in high-stakes selection decisions.


What’s next?

Based on the positive feedback from both programs and applicants in the pilot, all three admissions assessments are now available to all US GME programs, with some notable improvements. These include:

- Refined categories and characteristics in Duet, so that the characteristics differentiate programs even further for matching purposes.

- Earlier implementation, with test dates before ERAS opens to programs, giving programs more time to use Casper and Duet scores throughout their selection process.

- Improved scoring guide to make it easier for programs to interpret and leverage all scores.